Experiments

Introduction

Experiments are an optional segmentation feature that lets users test the performance of various parts of the segmentation system. An experiment consists solely of a name and a list of targeting actions.

The key difference between the list of actions on an experiment and the list of actions on a segment is that in an experiment only one action from the list is selected and triggered during segmentation.

Targeting Actions

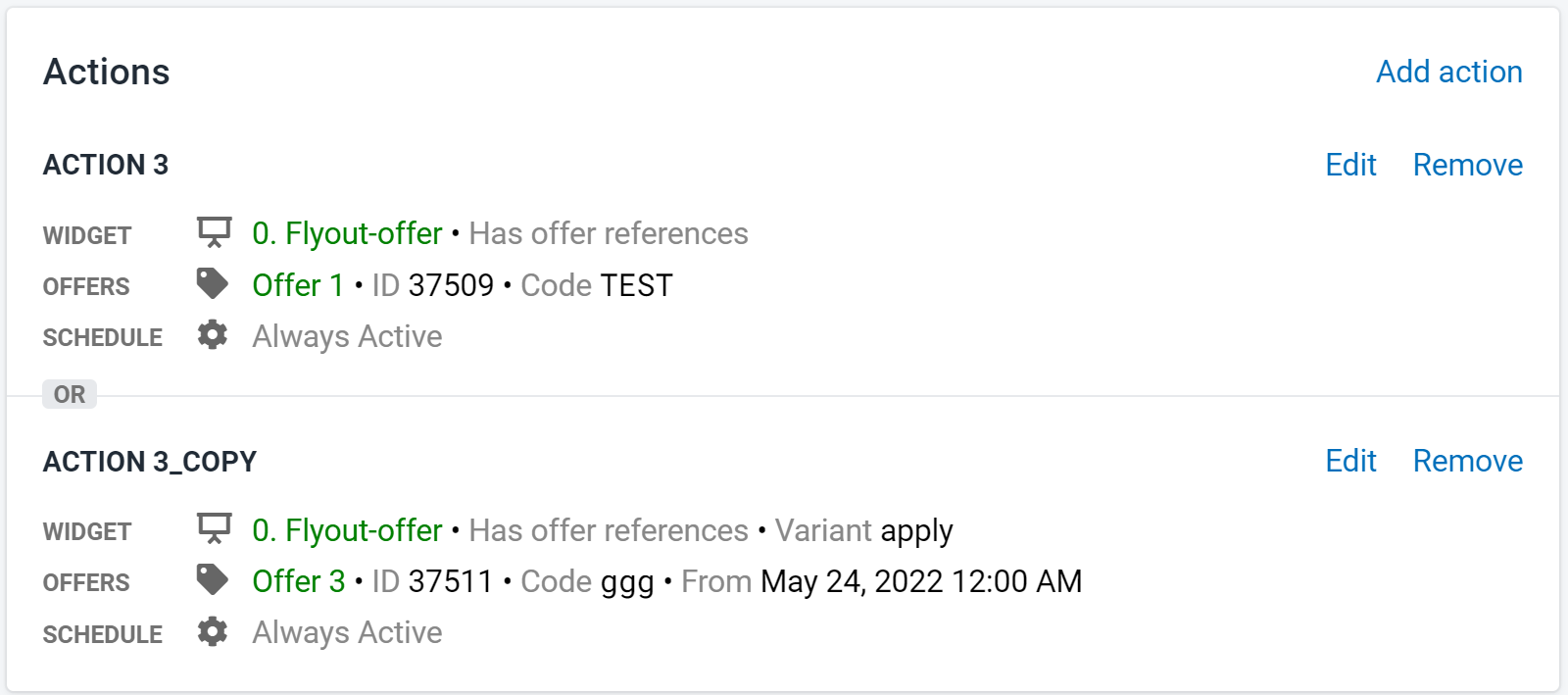

An experiment can have any number of actions, but to fully utilize the feature, an experiment should include more than one valid action. An experiment with no valid actions at the time of segmentation will be ignored.

Figure 1: Actions on Experiment

When an experiment is triggered as part of a segment, the system selects one of its actions to use. Selection follows a round-robin scheme designed to use each valid action an equal number of times. If an experiment is triggered several times during one session, the system keeps using the action it selected when the experiment was first triggered.
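The selection behavior described above can be sketched in a few lines. This is only an illustration, not the system's actual implementation: the `Action` and `Experiment` classes, the `valid` flag, the `select_action` method, and the use of a session ID string are all hypothetical names introduced for this example. The sketch also assumes that a session falls back to a fresh round-robin pick if its earlier action is no longer valid, which the text above does not specify.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Action:
    name: str
    valid: bool = True  # hypothetical flag: is the action usable right now?


@dataclass
class Experiment:
    name: str
    actions: List[Action]
    _next_index: int = 0  # rotating cursor shared by all sessions
    _sticky: Dict[str, str] = field(default_factory=dict)  # session ID -> action name

    def select_action(self, session_id: str) -> Optional[Action]:
        valid = [a for a in self.actions if a.valid]
        if not valid:
            return None  # no valid actions: the experiment is ignored

        # Reuse the action picked earlier in this session, if it is still valid
        # (assumption: otherwise fall through to a new round-robin pick).
        previous = self._sticky.get(session_id)
        for action in valid:
            if action.name == previous:
                return action

        # Round-robin: take the next valid action in rotation.
        action = valid[self._next_index % len(valid)]
        self._next_index += 1
        self._sticky[session_id] = action.name
        return action


experiment = Experiment("homepage-test", [Action("action-1"), Action("action-2")])
first = experiment.select_action("session-a")   # first pick in the rotation
again = experiment.select_action("session-a")   # same session, same action
other = experiment.select_action("session-b")   # new session, next action in rotation
```

Keeping the rotating cursor separate from the per-session record is what lets repeated triggers in one session stay on the same action without skewing the rotation for other sessions.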

There is no required relationship between the actions set on an experiment. They do not need to share any attributes or be related in any way. However, keeping them closely related is often advantageous. See Experimentation Strategies below for more information.

Experimentation Strategies

As stated above, experiments can have any number of actions attached with any particular settings. However, the ideal way to use experiments would be to isolate a specific aspect of an action to test. This is done by creating two or more actions that are identical except for exactly one setting. The changed setting can be considered the variable to test, with everything else being constants. Any setting on an action can be used as the variable, but the most common are the widget, variant, and offer.
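One way to check that a pair of actions isolates a single variable is to compare their settings and list the ones that differ. The sketch below is illustrative only: it assumes each action can be represented as a plain dictionary of setting names to values, and the helper names `differing_settings` and `isolates_one_variable` are hypothetical.

```python
from typing import Any, Dict, List


def differing_settings(a: Dict[str, Any], b: Dict[str, Any]) -> List[str]:
    """Return the sorted names of settings whose values differ between two actions.

    A setting missing from one action is treated as None for the comparison.
    """
    keys = set(a) | set(b)
    return sorted(k for k in keys if a.get(k) != b.get(k))


def isolates_one_variable(a: Dict[str, Any], b: Dict[str, Any]) -> bool:
    """True when the two actions are identical except for exactly one setting."""
    return len(differing_settings(a, b)) == 1


# Hypothetical actions that differ only in their variant.
action_a = {"widget": "Widget A", "variant": "variant-a", "offer": "Offer A"}
action_b = {"widget": "Widget A", "variant": "variant-b", "offer": "Offer A"}

variable = differing_settings(action_a, action_b)   # ["variant"]
clean_test = isolates_one_variable(action_a, action_b)  # True
```

If the list contains more than one setting name, any performance difference between the actions cannot be attributed to a single change.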

Below are some explanations of common testing strategies. For the following section, assume we have an experiment with two valid actions. That said, these strategies extend to experiments with three or more actions as well.

Remember that all performance-related data is available in Insights.

Widget Testing

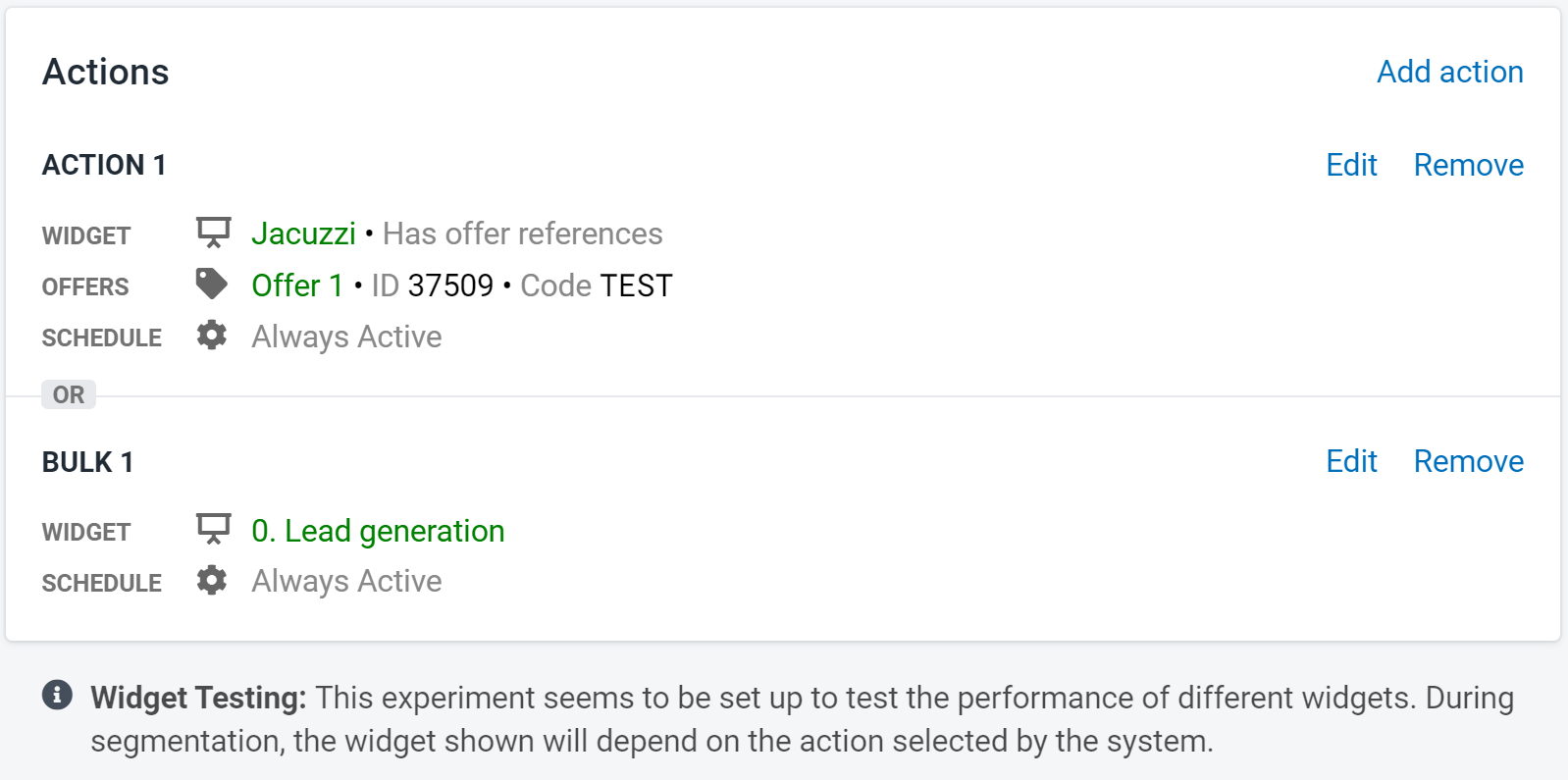

Widget testing occurs when one action references "Widget A" and another action references "Widget B". Ideally, a widget test would keep everything else in both actions identical, but this is often not possible, because changing a widget can have a significant impact on the options available in the rest of the action. For example, if "Widget B" does not support offers, then its action cannot have offers attached, whereas "Widget A" may allow offers on its action.

Even so, any experiment whose actions reference two separate widgets constitutes widget testing. Widget testing can be useful in determining which widget leads to the most clicks and drives the most revenue.

Figure 2: Widget Testing Example

Variant Testing

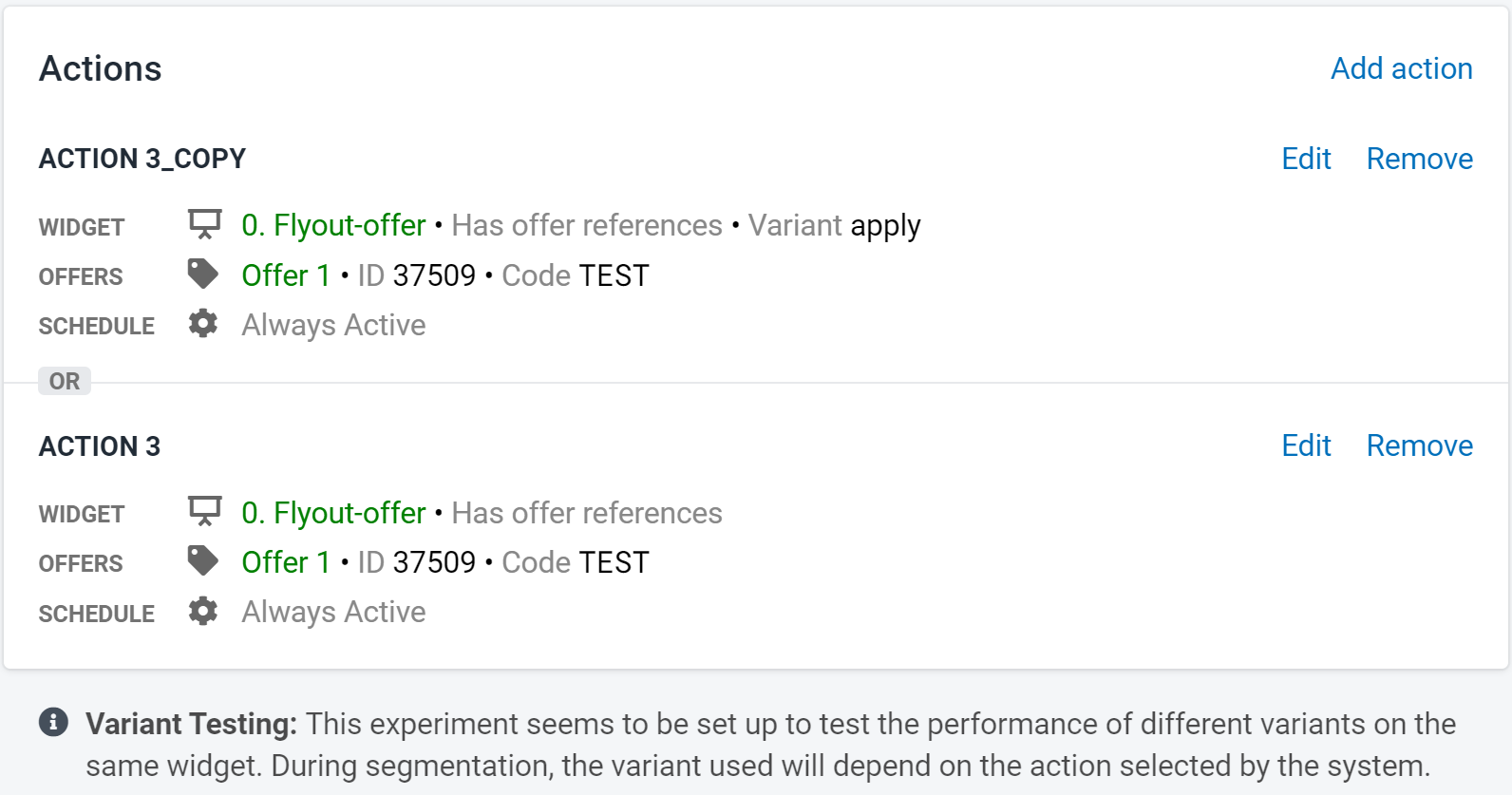

Variant testing occurs when one action references "variant-a" and another action references "variant-b". Unlike widgets, setting a variant on an action has no impact on the settings available in the rest of the action. This means that it is entirely possible, and advised, for the two actions to be identical apart from the variant. It is also acceptable to have one action use the default variant instead of a defined variant.

Variant testing can be used to determine the performance of variants inside a particular widget. For example, if a more brightly colored widget background might lead to more clicks, variant testing is an excellent way to find out.

Figure 3: Variant Testing Example

Offer Testing

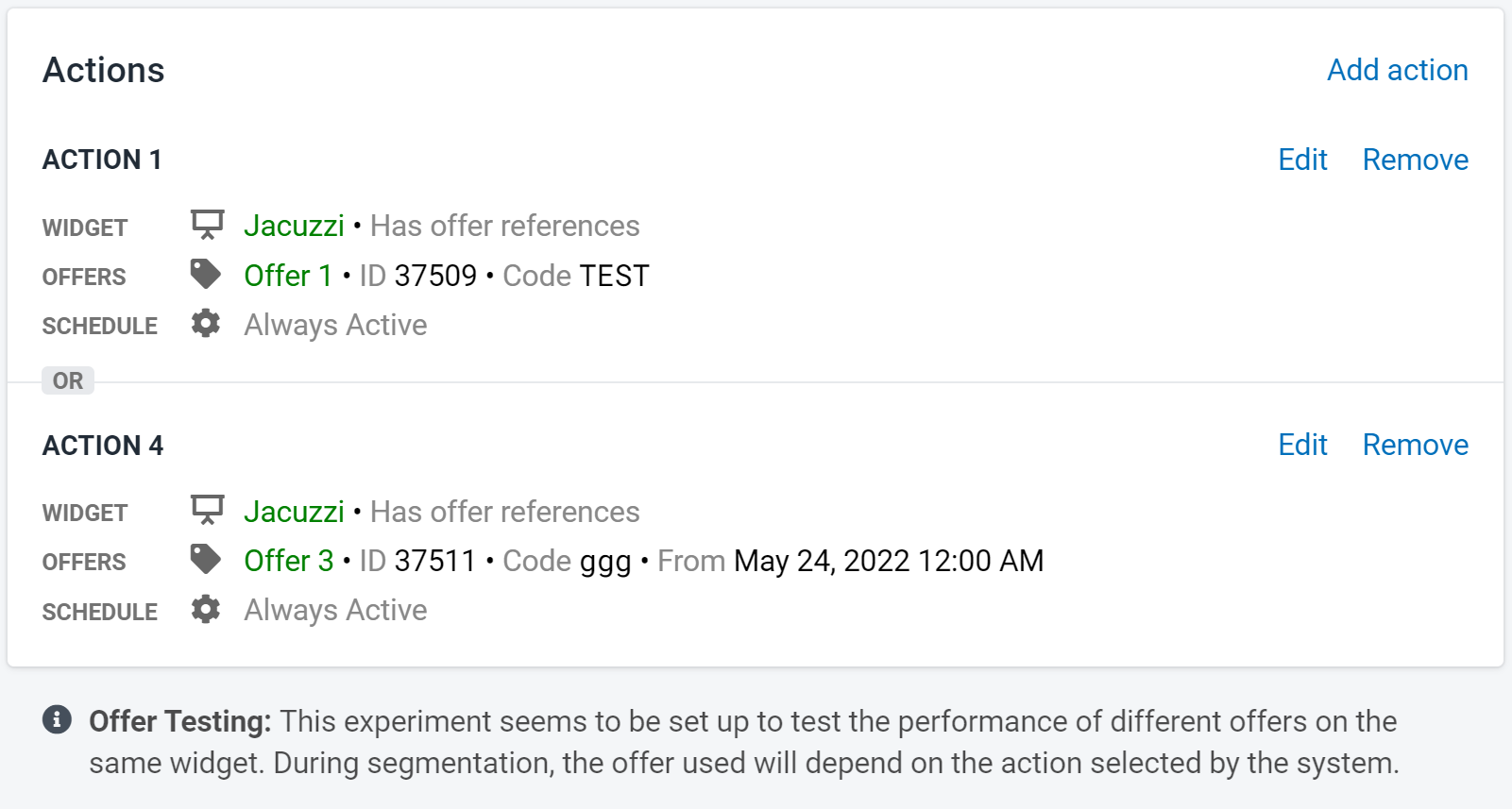

Offer testing occurs when one action references "Offer A" and another action references "Offer B". As with variants, the selected offer should not affect the rest of the action's available settings. This means that the two actions should be identical apart from their active offer. Of course, offer testing is only available if the actions refer to a widget that supports offers.

Offer testing is perhaps the most common form of experiment testing and is an easy way to see which offer generates the most widget clicks or offer code uses.

Figure 4: Offer Testing Example
